Data Integrity and Normalization

Introduction To Data Integrity And Normalization

Introduction:

In this article, you will learn what data integrity and database normalization are.

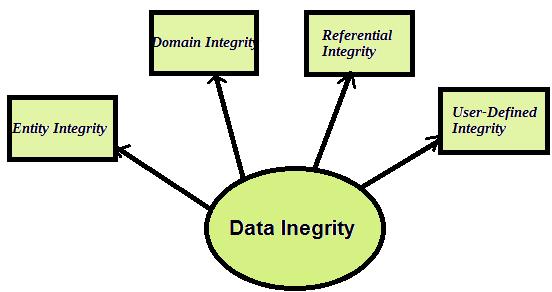

Data integrity refers to the overall validity and accuracy of data. It is a critical condition for the design, implementation, and usage of any system that stores, processes, or retrieves data. It is marked by the absence of unintended alteration between two instances of a data record or between two updates of it. Data integrity can be maintained through error-checking techniques and validation procedures.

The following categories of data integrity exist in each RDBMS:

- Entity Integrity: Entity integrity defines each row as a unique entity in a given table. It is enforced through the identifier column(s), that is, the primary key of the table.

- Domain Integrity: Domain integrity is the validity of the data in a given column. You can enforce it by restricting the data type, the format, or the range of possible values.

- Referential Integrity: This constraint is defined between two tables and is used to keep their rows consistent with each other. You can't delete or change a value in the primary table if related records reference it. You can't enter a value in the foreign key field of a table if that value doesn't exist in the primary key of the primary table. However, you can enter a Null value in the foreign key, which indicates that the records are unrelated.

- User-Defined Integrity: Business rules may dictate that when a particular action occurs, further actions should be triggered.
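The four categories above can be sketched with Python's built-in `sqlite3` module. This is only an illustration under stated assumptions: the `department`, `employee`, and `audit_log` tables, the age range, and the `rejected` helper are hypothetical names invented for this example, and other RDBMS products express the same constraints with slightly different syntax.

```python
import sqlite3

# Autocommit mode keeps the example simple: every statement takes effect immediately.
conn = sqlite3.connect(":memory:", isolation_level=None)
conn.execute("PRAGMA foreign_keys = ON")  # SQLite checks foreign keys only when enabled

# Hypothetical schema, invented for this illustration.
conn.executescript("""
CREATE TABLE department (
    dept_id INTEGER PRIMARY KEY,                      -- entity integrity
    name    TEXT NOT NULL
);
CREATE TABLE employee (
    emp_id  INTEGER PRIMARY KEY,                      -- entity integrity
    name    TEXT NOT NULL,
    age     INTEGER CHECK (age BETWEEN 18 AND 70),    -- domain integrity
    dept_id INTEGER REFERENCES department(dept_id)    -- referential integrity
);
CREATE TABLE audit_log (emp_id INTEGER, note TEXT);
-- User-defined integrity: a business rule that logs every employee removal.
CREATE TRIGGER log_employee_delete AFTER DELETE ON employee
BEGIN
    INSERT INTO audit_log VALUES (OLD.emp_id, 'employee removed');
END;
""")


def rejected(sql, params=()):
    """Run a statement; return True if the database rejects it as an integrity violation."""
    try:
        conn.execute(sql, params)
        return False
    except sqlite3.IntegrityError:
        return True


insert_emp = "INSERT INTO employee VALUES (?, ?, ?, ?)"
conn.execute("INSERT INTO department VALUES (1, 'Sales')")
conn.execute(insert_emp, (1, "Ann", 30, 1))

results = {
    "duplicate primary key": rejected(insert_emp, (1, "Bob", 40, 1)),
    "age outside domain": rejected(insert_emp, (2, "Bob", 12, 1)),
    "unknown department": rejected(insert_emp, (3, "Cy", 25, 99)),
    "null foreign key": rejected(insert_emp, (4, "Di", 25, None)),
    "delete referenced department": rejected("DELETE FROM department WHERE dept_id = 1"),
}
for case, was_rejected in results.items():
    print(f"{case}: {'rejected' if was_rejected else 'accepted'}")

# Deleting an employee fires the trigger, which writes a row to the audit log.
conn.execute("DELETE FROM employee WHERE emp_id = 4")
print(conn.execute("SELECT * FROM audit_log").fetchall())
```

In this sketch, only the insert with a Null foreign key is accepted. The other four statements are refused by the database itself, so the rules hold no matter which application writes to the tables.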

Database Normalization

Normalization is a technique for organizing the data in a database. It is used to avoid data redundancy and the insertion, update, and deletion anomalies that redundancy causes.

Database normalization is the process of efficiently organizing the data in a database.

The normalization process has two goals:

- Eliminating redundant data: For example, avoiding storing the same item in more than one table.

- Ensuring data dependencies make sense: Storing only related items together in a table.
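To make the first goal concrete, here is a minimal sketch of how redundant data can lead to an update anomaly, and how storing each fact once avoids it. The `orders_flat`, `customer`, and `orders` tables and their sample rows are hypothetical, chosen only for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Hypothetical denormalized table: the customer's city is repeated on every order.
conn.execute(
    "CREATE TABLE orders_flat (order_id INTEGER PRIMARY KEY, customer TEXT, city TEXT)"
)
conn.executemany(
    "INSERT INTO orders_flat VALUES (?, ?, ?)",
    [(1, "Ann", "Pune"), (2, "Ann", "Pune"), (3, "Raj", "Delhi")],
)

# Update anomaly: the customer moves, but only one of the redundant copies is changed.
conn.execute("UPDATE orders_flat SET city = 'Mumbai' WHERE order_id = 1")
cities_flat = conn.execute(
    "SELECT DISTINCT city FROM orders_flat WHERE customer = 'Ann' ORDER BY city"
).fetchall()
print(cities_flat)  # the same customer now appears with two conflicting cities

# Storing the city once, in its own table, leaves a single place to update.
conn.execute("CREATE TABLE customer (name TEXT PRIMARY KEY, city TEXT)")
conn.execute(
    "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, "
    "customer TEXT REFERENCES customer(name))"
)
conn.executemany("INSERT INTO customer VALUES (?, ?)", [("Ann", "Pune"), ("Raj", "Delhi")])
conn.executemany("INSERT INTO orders VALUES (?, ?)", [(1, "Ann"), (2, "Ann"), (3, "Raj")])
conn.execute("UPDATE customer SET city = 'Mumbai' WHERE name = 'Ann'")
cities_normalized = conn.execute(
    "SELECT DISTINCT c.city FROM orders o JOIN customer c ON c.name = o.customer "
    "WHERE o.customer = 'Ann'"
).fetchall()
print(cities_normalized)  # a single, consistent city
```

The flat table ends up holding both the old and the new city for the same customer, while the split design has only one value to change.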

Normalization is a series of guidelines that help you build a good database structure. These guidelines are divided into normal forms:

First Normal Form (1NF)

Second Normal Form (2NF)

Third Normal Form (3NF)

The objective of the normal forms is to structure the database so that it complies with the rules of first normal form, then second normal form, and finally third normal form.

- First Normal Form (1NF): This eliminates duplicate columns from the table. A table in 1NF contains no repeating groups of information: each column holds a single value, and each row can be identified uniquely by a column or set of columns (the primary key).

- Second Normal Form (2NF): In 2NF, the table is already in 1NF and no non-key column has a partial dependency on the primary key. Every non-key column must depend on the whole key, not on just part of it.

- Third Normal Form (3NF): In 3NF, the table is already in 2NF and every non-key field depends directly on the primary key, not on another non-key field.
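The three steps above can be traced on a small data set. This is a minimal sketch in plain Python, not a definitive procedure. The student, course, and instructor records are hypothetical, and the example assumes that each course has one instructor and each instructor has one office.

```python
# Hypothetical unnormalized records: each student carries a repeating group of courses.
# Each course entry is (course_id, title, instructor, instructor_office).
unnormalized = [
    {"student_id": 1, "student_name": "Ann",
     "courses": [("C1", "Databases", "Rao", "R101"), ("C2", "Networks", "Sen", "R202")]},
    {"student_id": 2, "student_name": "Raj",
     "courses": [("C1", "Databases", "Rao", "R101")]},
]

# 1NF: one single value per column; each row is identified by (student_id, course_id).
first_nf = [
    (s["student_id"], s["student_name"], course_id, title, instructor, office)
    for s in unnormalized
    for course_id, title, instructor, office in s["courses"]
]

# 2NF: remove partial dependencies on the composite key.
# student_name depends only on student_id; the course facts depend only on course_id.
students = {sid: name for sid, name, *_ in first_nf}
courses_2nf = {
    cid: (title, instructor, office)
    for _, _, cid, title, instructor, office in first_nf
}
enrollments = sorted({(sid, cid) for sid, _, cid, *_ in first_nf})

# 3NF: remove the transitive dependency course_id -> instructor -> office.
courses = {cid: (title, instructor) for cid, (title, instructor, _) in courses_2nf.items()}
instructors = {instructor: office for _, instructor, office in courses_2nf.values()}

# Joining the small tables back together reproduces the 1NF rows, so nothing was lost.
rejoined = [
    (sid, students[sid], cid, *courses[cid], instructors[courses[cid][1]])
    for sid, cid in enrollments
]

print(students)
print(courses)
print(instructors)
print(enrollments)
print(rejoined == first_nf)
```

After the split, each fact (a student's name, a course's title, an instructor's office) is stored exactly once, and the original rows can still be rebuilt by joining on the keys.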

Summary:

Thus, we learned that data integrity is maintained through error-checking techniques and validation procedures, and that database normalization is used to organize data efficiently and reduce redundancy.