Load Balancing in Cloud Computing

Cloud computing is often described as the next generation of computing. The technology is growing rapidly, and so are clients' demands for more services and better results. As the IT industry grows, so does its need for computing and storage resources. Large quantities of data are generated and exchanged over secured and unsecured networks, which further increases the need for advanced computing resources. To make the most of their investment, organizations are moving their operations to virtualization-based technologies such as cloud computing. Nowadays, people can get almost everything they need from the cloud. Cloud computing provides resources, whether software or hardware, to clients on demand.

Cloud architectures are both distributed and parallel, and they serve the needs of multiple clients at the same time using multiple processors. Clients in a distributed environment can generate requests at any processor in the network at random. The major drawback of this arrangement lies in the assignment of tasks: unequal assignment creates an imbalance in which some processors are heavily overloaded while others are underloaded.

The main objective of load balancing is to transfer load from overloaded processors to underloaded or idle ones. Load balancing is essential for efficient operation in a distributed environment: transferring tasks between nodes in the cloud network yields better performance, shorter response times, and higher resource utilization.
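The idea of moving work from overloaded nodes to underloaded ones can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: every task is assumed to cost the same, load is measured as queue length, and the names `rebalance`, `queues`, and `tolerance` are hypothetical, not taken from any cloud platform.

```python
from typing import Dict, List, Tuple


def rebalance(queues: Dict[str, List[str]], tolerance: int = 1) -> List[Tuple[str, str, str]]:
    """Move tasks from the busiest node to the idlest node until the
    longest and shortest queues differ by at most `tolerance` tasks.

    `queues` maps a node name to its list of pending task ids and is
    modified in place. Returns the moves made as (task, source, target).
    """
    if not queues:
        return []
    if tolerance < 1:
        # With a tolerance of 0 a single task could bounce back and forth forever.
        raise ValueError("tolerance must be at least 1")

    moves = []
    while True:
        busiest = max(queues, key=lambda node: len(queues[node]))
        idlest = min(queues, key=lambda node: len(queues[node]))
        if len(queues[busiest]) - len(queues[idlest]) <= tolerance:
            return moves
        task = queues[busiest].pop()      # take one task off the overloaded node
        queues[idlest].append(task)       # hand it to the underloaded node
        moves.append((task, busiest, idlest))


if __name__ == "__main__":
    cluster = {"a": ["t1", "t2", "t3", "t4", "t5", "t6"], "b": [], "c": ["t7"]}
    for task, src, dst in rebalance(cluster):
        print(f"moved {task}: {src} -> {dst}")
    print({node: len(tasks) for node, tasks in cluster.items()})
```

A real balancer would also weigh the cost of migrating each task, which this sketch ignores.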

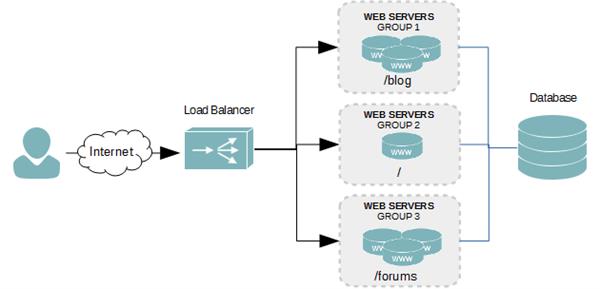

Cloud load balancing is the process of distributing workloads and computing resources across the available servers. This distribution aims to maximize throughput while minimizing response time. The workload is divided among two or more servers, hard drives, network interfaces, or other computing resources, which enables better resource utilization and improves system response time. Thus, for a website with a high traffic rate, effective use of cloud load balancing can help ensure business continuity. The common objectives of using load balancers are:

- To maintain system stability.

- To improve system performance.

- To protect against system failures.

Cloud providers such as Amazon Web Services (AWS), Microsoft Azure, and Google offer cloud load balancing to make workload distribution easy. For example, AWS offers Elastic Load Balancing (ELB) to distribute traffic among Elastic Compute Cloud (EC2) instances, and many AWS-powered applications use Elastic Load Balancers (ELBs) as a key architectural component. Similarly, Azure's Traffic Manager distributes traffic across multiple data centers.

Working of Load Balancing

Load refers not only to website traffic but also to the CPU load, network load, and memory capacity of every server. A load balancing technique aims to give every system connected to the network a comparable workload at any instant, so that no system is excessively overloaded or underutilized.

The load balancer distributes work depending on how busy each server or node is at a particular time. In the absence of a load balancer, the client must wait while the request is processed, and long waits are frustrating for users. During the load balancing process, the processors exchange information such as the number of jobs waiting in the queue, the CPU processing rate, and the job arrival rate. An incorrectly implemented load balancer can lead to serious problems, including data loss.
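As a rough illustration of how such exchanged information might drive a routing decision, the Python sketch below picks the node with the shortest estimated wait. The scoring rule (queue length divided by processing rate, with the ratio of arrival rate to processing rate as a tie-breaker) is an assumption made for this example, and `NodeStatus`, `estimated_wait`, and `pick_node` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class NodeStatus:
    name: str
    queued_jobs: int         # jobs currently waiting in the node's queue
    processing_rate: float   # jobs the node completes per second
    arrival_rate: float      # jobs arriving at the node per second


def estimated_wait(node: NodeStatus) -> float:
    """Seconds a new job would wait, counting itself, if it joined this queue."""
    return (node.queued_jobs + 1) / node.processing_rate


def pick_node(nodes: Iterable[NodeStatus]) -> NodeStatus:
    """Return the node with the shortest estimated wait.

    Ties are broken in favour of the node whose arrivals are smallest
    relative to its processing rate. Nodes with a zero rate are skipped.
    """
    candidates = [n for n in nodes if n.processing_rate > 0]
    if not candidates:
        raise RuntimeError("no node is able to process jobs")
    return min(
        candidates,
        key=lambda n: (estimated_wait(n), n.arrival_rate / n.processing_rate),
    )


if __name__ == "__main__":
    statuses = [
        NodeStatus("node-a", queued_jobs=10, processing_rate=5.0, arrival_rate=4.0),
        NodeStatus("node-b", queued_jobs=2, processing_rate=1.0, arrival_rate=0.5),
        NodeStatus("node-c", queued_jobs=4, processing_rate=4.0, arrival_rate=1.0),
    ]
    print(pick_node(statuses).name)
```

This is a dynamic strategy in the sense that the choice changes as the reported queue lengths and rates change.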

Different companies may use different load balancers and different load balancing algorithms, which fall broadly into static and dynamic approaches. One of the most commonly used methods is round-robin load balancing.

Round-robin load balancing forwards client requests to each connected server in turn. On reaching the end of the list, the load balancer loops back to the first server and repeats. Its major benefit is ease of implementation. The load balancer also checks system heartbeats at set time intervals to verify that each node is performing well.
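A minimal Python sketch of round-robin selection follows. The class name `RoundRobinBalancer` and the `is_healthy` callback are hypothetical. As a simplification, health is checked each time a server is picked rather than on a timed heartbeat as described above.

```python
from typing import Callable, Sequence


class RoundRobinBalancer:
    """Hands out servers in list order, wrapping around at the end."""

    def __init__(self, servers: Sequence[str],
                 is_healthy: Callable[[str], bool] = lambda server: True):
        if not servers:
            raise ValueError("at least one server is required")
        self._servers = list(servers)
        self._is_healthy = is_healthy   # stand-in for a heartbeat check
        self._next = 0                  # index of the next server to try

    def next_server(self) -> str:
        """Return the next healthy server, skipping any that fail the check."""
        for _ in range(len(self._servers)):
            server = self._servers[self._next]
            self._next = (self._next + 1) % len(self._servers)
            if self._is_healthy(server):
                return server
        raise RuntimeError("no healthy servers available")


if __name__ == "__main__":
    balancer = RoundRobinBalancer(["s1", "s2", "s3"])
    print([balancer.next_server() for _ in range(5)])

    down = {"s2"}  # pretend s2 missed its heartbeat
    guarded = RoundRobinBalancer(["s1", "s2", "s3"], is_healthy=lambda s: s not in down)
    print([guarded.next_server() for _ in range(4)])
```

Round-robin is a static strategy: it ignores how busy each server is, which is why it is simple to implement but can still leave servers unevenly loaded when requests differ in cost.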

Hardware vs. Software Load Balancing

Traditional load balancing solutions relied solely on proprietary hardware housed in a data center and required a team of highly skilled IT personnel to install, tune, and maintain the system. Only large companies with large IT budgets could realize the benefits of improved performance and reliability. In the age of cloud computing, hardware-based solutions have a serious drawback: they cannot support cloud load balancing, because cloud infrastructure vendors typically do not allow customer-owned or proprietary hardware inside their environments.

Fortunately, software-based load balancers can deliver the performance and reliability benefits of hardware-based solutions at a much lower cost. Because they run on inexpensive, easy-to-obtain hardware, they are affordable even for smaller companies. Software-based load balancers are ideal for cloud load balancing, as they can run in the cloud like any other software application or package.

Advantages of Load Balancing

(I) High-performing applications

Cloud load balancing techniques, unlike their traditional counterparts, are inexpensive and easy to implement. An organization can make its client applications run faster and perform better at very little cost.

(II) Increased scalability

Cloud load balancing draws on the cloud's scalability and agility to manage website traffic. With effective load balancers, you can keep up with increased user and network traffic and distribute it among various servers or network devices. This is especially important for e-commerce websites, which may deal with thousands of visitors every second. During sales or other promotional offers, they need effective load balancers to distribute the workload.

(III) Ability to handle sudden traffic spikes

A university website that normally runs fine can go down completely when results are declared, because too many requests arrive at the same time. With cloud load balancers, such traffic surges are much less of a concern: however large the volume of requests, it can be divided and distributed among different servers so that more requests are served with shorter response times.

(IV) Business continuity with complete flexibility

The basic objective of using a load balancer is to protect a website from sudden outages. When the workload is distributed among various servers or network units, the system keeps working even if one node fails during processing, because the burden can be shifted to another active node. Thus, with its scalability and other features, load balancing can handle website or application traffic even as the load grows.

Conclusion

Cloud computing has been widely adopted by the industry, though many open issues, such as server consolidation, load balancing, energy management, and virtual machine migration, have not been fully addressed. Central to these is load balancing, which is required to distribute the excess dynamic local workload evenly across all nodes in the cloud to achieve high user satisfaction and a high resource utilization ratio. The load balancing techniques studied so far mainly focus on reducing overhead and service response time and on improving performance, but none of them consider the execution time of a task at run time. Therefore, there is a need to develop a load balancing technique that can improve the performance of cloud computing while maximizing resource utilization.